= Neptune DXP on AWS - apps and microservices

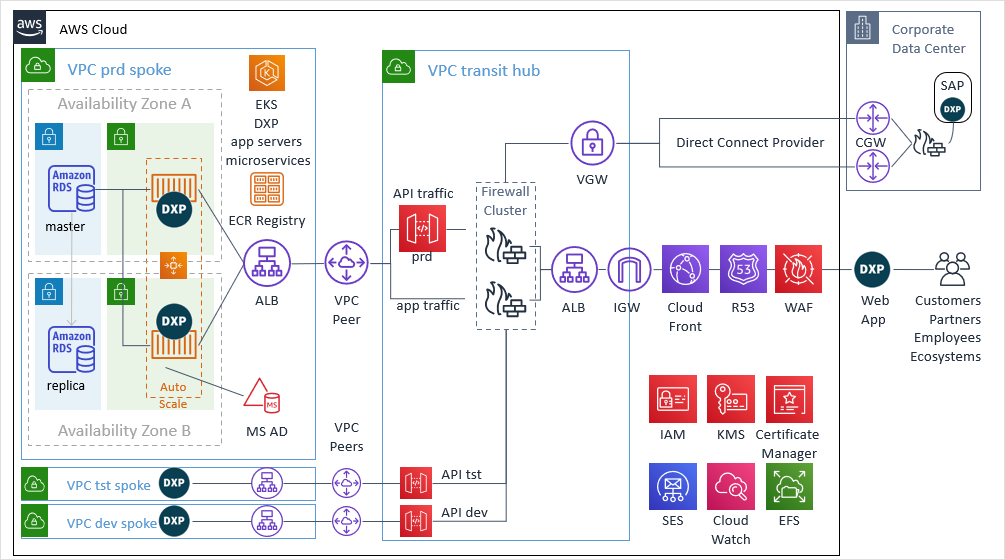

Building on the case study "Neptune DXP on AWS apps", we can go a step further by using Neptune DXP not only as an application server but also to deliver business services through Neptune DXP microservice deployments and to connect to an SAP/ERP backend.

Introducing Neptune DXP microservices into the architecture is likely to increase the number of auto-scaling Neptune DXP instances. At that point, hosting Neptune DXP on a traditional arrangement of always-on compute resources may become cumbersome and prohibitively expensive.

Common patterns for tackling this are:

- Containerised workload orchestration services for hosting Neptune DXP instances
- Hub & spoke networks using VPC peering to handle environment-specific stacks
- Clustered corporate firewall services to validate and distribute incoming traffic
- Direct corporate data centre connectivity to access a backend ERP/SAP system from the Neptune DXP application server and/or microservices via the Neptune DXP SAP connector

The matrix below expands on the components used in the Neptune DXP on AWS apps case study, offering additional insight into the purpose of each cloud service and how it supports your Neptune DXP application server workload:

Transit Hub Network

- Transit hub network

  A single-region virtual private cloud (VPC) created to host DXP application servers across two or more availability zones.

  Its shared-services nature means that we can implement an effective network isolation mechanism when operating multiple software development lifecycle environments, achieving a clear separation of concerns between development, testing and production.

- Hub load balancer

  The hub load balancer uses path-based routing to send traffic to the correct spoke or to the corporate data centre.

- Firewall cluster

  A corporate firewall that inspects and validates all API requests for malicious traffic. To achieve high availability, a clustered deployment of two or more firewalls should be used.

- API gateway

  All API requests made by a Neptune DXP application terminate at the API gateway. Each request targets a proxy URL that points to the backend application load balancer managing the connections to the Neptune DXP microservices. For example, a POST Orders request may target the /orders resource of a Neptune DXP Order microservice at POST serverscript/orders.

- Virtual gateway

  A routing function that sits at the edge of the hub network and connects to the data centre through a direct connect provider.

- VPC peer

  A network connection that enables traffic to be routed from the hub to a spoke. In our example, the hub and spokes are in the same region and part of the same AWS account; however, both can vary depending on the complexity of your technical landscape.
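
To make the hub load balancer's path-based routing and the API gateway's proxy mapping more concrete, the following minimal Python sketch models both decisions. The path prefixes, target names and backend URL are hypothetical placeholders, not values taken from the case study. In a real deployment these rules would live in the load balancer and API gateway configuration rather than in application code.

```python
from dataclasses import dataclass

# Hypothetical path prefixes and targets (assumptions for illustration only).
HUB_ROUTES = {
    "/dev": "spoke-dev-load-balancer",
    "/test": "spoke-test-load-balancer",
    "/prod": "spoke-prod-load-balancer",
    "/sap": "corporate-data-centre",
}


def route_at_hub(path: str) -> str:
    """Return the target whose path prefix matches the request path.

    A prefix matches only on whole path segments, so "/production"
    does not match "/prod". The longest matching prefix wins.
    """
    matches = [p for p in HUB_ROUTES if path == p or path.startswith(p + "/")]
    if not matches:
        raise LookupError(f"no hub route for {path!r}")
    return HUB_ROUTES[max(matches, key=len)]


@dataclass(frozen=True)
class ProxyTarget:
    method: str
    url: str


def resolve_proxy(method: str, resource: str, backend_base_url: str) -> ProxyTarget:
    """Map an API gateway resource (e.g. /orders) to a serverscript endpoint.

    backend_base_url stands for the application load balancer in front of
    the microservice; its value here is a made-up example.
    """
    base = backend_base_url.rstrip("/")
    return ProxyTarget(method.upper(), f"{base}/serverscript/{resource.strip('/')}")


if __name__ == "__main__":
    print(route_at_hub("/prod/orders"))
    print(resolve_proxy("post", "/orders", "https://orders.prod.example.internal"))
```

Matching on whole path segments keeps environments from bleeding into each other when prefixes share leading characters, which matters when the hub is the only entry point to every spoke.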

Corporate network

- Corporate data centre

  Represents your data centre network. In this case, it hosts an on-premises SAP installation accessed by Neptune DXP microservices through a secure and private connection implemented using the AWS Direct Connect service.

- Customer gateway

  A physical or software appliance installed on-premises in your corporate network to receive traffic from Neptune DXP instances through the AWS Direct Connect service.

- Neptune DXP SAP connector

  Installed directly on the SAP application server(s) to handle API requests received from Neptune DXP instances targeting your SAP system.

Spoke Network

- Spoke network

  In this case, a spoke is created for each operating environment, that is, development, test and production. The composition of each spoke is the same, although the security makeup and data contents of the production spoke are likely to differ from those of the non-production spokes.

- Spoke load balancer

  The spoke load balancer distributes traffic to the Neptune DXP application servers.

- Elastic Kubernetes service

  A more agile arrangement takes advantage of Neptune DXP’s pre-packaged Docker image by adopting a Kubernetes orchestration solution, deployed as a cloud service, to organise the delivery of both Neptune DXP application server and microservice instances. In this case, we use AWS’s Elastic Kubernetes Service (EKS).

- Elastic container registry

  The Neptune DXP application server is the most important workload in this stack. As it must be highly available, new instances are spun up and terminated in response to demand and ad hoc failures. To avoid setting up each new instance manually, we register our Neptune DXP configuration, packaged as a Docker container image, with an AWS elastic container registry service. When a new instance is required, EKS references the registry and retrieves our Neptune DXP container image.

- Neptune DXP instances

  Dynamically auto-scaled Neptune DXP application server and microservice instances spun up by the Elastic Kubernetes Service.

- Microsoft Active Directory

  Used as the identity provider for Neptune DXP corporate users and resources. Neptune DXP can be configured to delegate the validation of a user’s credentials to the Active Directory service, which issues Neptune DXP with a token so that the user’s credentials do not have to be re-sent with each request.
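
The interplay between the container registry, EKS and the auto-scaled Neptune DXP instances can be sketched as a pair of Kubernetes manifests. The Python script below is a minimal illustration that builds a Deployment pulling the image from the registry and a HorizontalPodAutoscaler that scales it on CPU load. The account ID, region, repository name, tag, container port, CPU request and replica bounds are all assumptions chosen for the example; replace them with the values of your own landscape.

```python
import json

# Hypothetical values (assumptions for illustration only).
ACCOUNT_ID = "123456789012"
REGION = "eu-west-1"
REPOSITORY = "neptune-dxp"
TAG = "1.0.0"
CONTAINER_PORT = 8080  # assumed listening port; check your own image


def ecr_image_uri(account_id: str, region: str, repository: str, tag: str) -> str:
    """Build an image URI in the standard ECR private registry format."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


def deployment(name: str, image: str, replicas: int = 2) -> dict:
    """Deployment running the Neptune DXP container image."""
    labels = {"app": name}
    container = {
        "name": name,
        "image": image,
        "ports": [{"containerPort": CONTAINER_PORT}],
        # A CPU request is needed for utilisation-based autoscaling.
        "resources": {"requests": {"cpu": "500m"}},
    }
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [container]},
            },
        },
    }


def autoscaler(name: str, min_replicas: int = 2, max_replicas: int = 6,
               cpu_percent: int = 70) -> dict:
    """HorizontalPodAutoscaler targeting the Deployment of the same name."""
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": name},
        "spec": {
            "scaleTargetRef": {"apiVersion": "apps/v1", "kind": "Deployment", "name": name},
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [{
                "type": "Resource",
                "resource": {
                    "name": "cpu",
                    "target": {"type": "Utilization", "averageUtilization": cpu_percent},
                },
            }],
        },
    }


def manifest_list(name: str = "neptune-dxp") -> dict:
    """Bundle both objects into a single Kubernetes List."""
    image = ecr_image_uri(ACCOUNT_ID, REGION, REPOSITORY, TAG)
    return {"apiVersion": "v1", "kind": "List",
            "items": [deployment(name, image), autoscaler(name)]}


if __name__ == "__main__":
    print(json.dumps(manifest_list(), indent=2))
```

Because `kubectl` accepts JSON as well as YAML, the output could be piped straight into `kubectl apply -f -`. CPU-based scaling additionally assumes that a metrics source such as the Kubernetes metrics server is available in the cluster.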

Neptune DXP application workloads

- Elastic file service

  Used to mount a file system to a Neptune DXP instance to enable the download of NPM packages and their associated files. As different Neptune DXP instances may depend on separate versions of the same NPM packages, it is recommended that each Neptune DXP instance uses its own segregated file system location rather than sharing locations with other services. This avoids the coupling and dependency issues that emerge when a shared package is upgraded for one service but the upgrade is not required by another.

- Simple email service

  Gives Neptune DXP instances access to a mail (SMTP) service for generating email messages.
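
Before entering SMTP settings into a Neptune DXP instance, it can be useful to check them independently. The sketch below is one possible way to do that with Python's standard library, assuming the regional SES SMTP endpoint and STARTTLS on port 587. The function names, addresses, subject line and region are hypothetical, and the sender address is assumed to be an identity already verified in SES.

```python
import smtplib
from email.message import EmailMessage


def ses_smtp_host(region: str) -> str:
    """Regional Amazon SES SMTP endpoint."""
    return f"email-smtp.{region}.amazonaws.com"


def build_test_message(sender: str, recipient: str) -> EmailMessage:
    """Create a small plain-text message for a connectivity check."""
    msg = EmailMessage()
    msg["From"] = sender  # assumed to be a verified SES identity
    msg["To"] = recipient
    msg["Subject"] = "Neptune DXP SMTP check"
    msg.set_content("Test message sent to verify the SMTP settings.")
    return msg


def send_via_ses(msg: EmailMessage, region: str, username: str,
                 password: str, port: int = 587) -> None:
    """Send the message using SES SMTP credentials over STARTTLS."""
    with smtplib.SMTP(ses_smtp_host(region), port, timeout=10) as smtp:
        smtp.starttls()
        smtp.login(username, password)
        smtp.send_message(msg)


if __name__ == "__main__":
    # Addresses below are placeholders; nothing is sent in this demo.
    message = build_test_message("noreply@example.com", "admin@example.com")
    print(ses_smtp_host("eu-west-1"))
    print(message["Subject"])
```

Calling `send_via_ses` requires SES SMTP credentials, which are separate from regular AWS access keys, so the demo above only builds the message and prints the endpoint.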